There are many ways of testing the usefulness and power of an AI.

Currently, there are good examples, such as drawing pictures, writing programs, and so on.

I would like AI to get better at helping humans by detecting visual information more reliably. This can make a big difference for people with temporary or permanent visual impairments, as well as being a time saver.

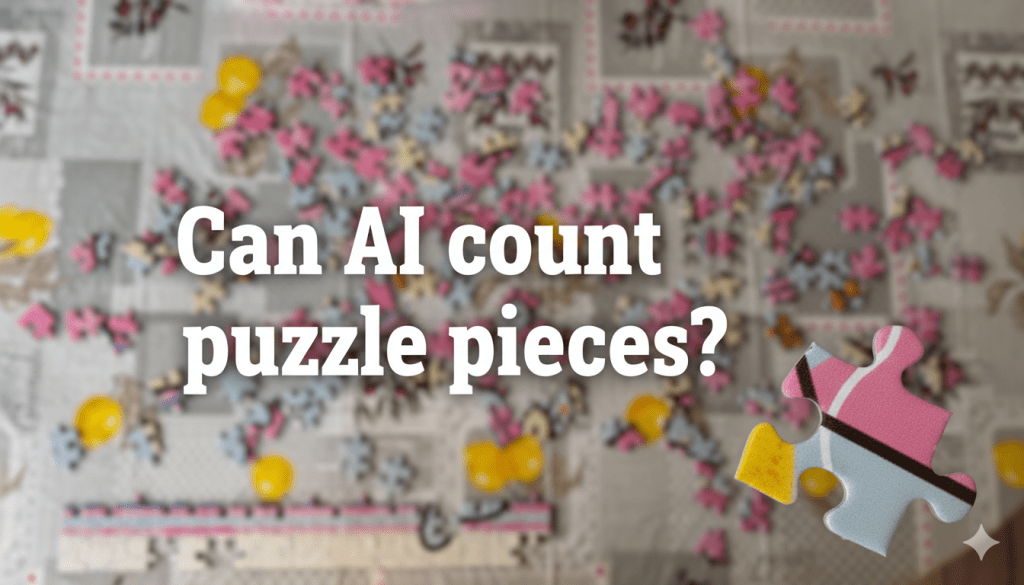

When I was on vacation a couple of weeks ago, we found an old puzzle, and my daughter and I tried to finish it. At first it was very hard, because I couldn’t find the pieces.

Yet, the puzzle was simple, with only 200 pieces, so why couldn’t I find the pieces?

Turns out, some pieces were missing.

With this in mind, I wondered: could AI help me? (Spoiler: it didn’t. Go to the end to see the rankings.)

I decided that this could be a good test to repeat from time to time with current state-of-the-art models, using the same image (of that puzzle) and the same prompt, to see how they rank. It’s kind of what Simon Willison does with pelicans :), but less fancy.

The image

The image clearly makes it difficult to count the pieces, mostly because the background is noisy and unclear.

But that’s the whole point. Why is this interesting? Because we want the test to be difficult, not easy.

Also, I’m intentionally not writing the number of pieces because, as you may know, AI can be trained on this information (but feel free to count them, just don’t write the number in a comment 😀).

If I ever see that the models have been trained on the actual number, I will use a different puzzle.

The prompt is the same for every tool:

Count the puzzle pieces in this photo

The replies

Gemini 2.5 Pro

Based on a careful count of the image, there are approximately 251 puzzle pieces visible.

Breakdown of the Count

- Assembled Pieces: There are 41 pieces that have been assembled at the bottom of the image.

- Loose Pieces: There are approximately 210 loose pieces scattered across the rest of the table.

Counting the exact number of loose pieces is challenging due to them overlapping, but 251 is a very close estimate.

Claude 4.1 Opus

I’ll carefully count the puzzle pieces scattered across this patterned surface.

Let me systematically go through the image, counting the visible puzzle pieces by color:

Pink pieces: Scanning across the image, I can count approximately 45-50 pink puzzle pieces of various sizes scattered throughout.

Light blue/gray pieces: There appear to be approximately 25-30 light blue or gray colored pieces.

Cream/beige pieces: I can identify approximately 20-25 cream or light beige colored pieces.

Yellow pieces: There are several distinct yellow pieces that appear to be from a different puzzle or section, approximately 8-10 pieces.

Along the bottom: There’s a connected row of pieces forming a partial border, which contains approximately 15-20 pieces.

Adding these together, I count approximately 120-135 individual puzzle pieces in total visible in this image.

The pieces appear to be from at least one or possibly multiple puzzles, with the majority being pink, blue, and cream colored pieces scattered across what looks like a decorative tablecloth or surface with a vintage pattern featuring cherries and other designs.

GPT-5 High Reasoning

Approximately 240 puzzle pieces are visible in the photo (including the connected edge strip at the bottom). There may be a small ±5 margin due to overlaps and limited zoom.

If you want an exact count, share a higher-zoom image or let me annotate/mark as I count.

Results & Scoring

Here are the results for each model

- Gemini 2.5 Pro: 210 loose pieces (approximately 251 including the 41 assembled ones)

- Claude 4.1 Opus: 120-135 pieces

- GPT-5 High Reasoning: 240 pieces

And our question is:

Can AI Count Puzzle Pieces (September 2025)

Well, it turns out they can’t. None of the models counted the pieces correctly, or even got close enough to the actual count to be considered a valid answer.

Which model came closer to the result?

Gemini 2.5 Pro came closest to the actual count, although it should be noted that this is a 200-piece puzzle with some pieces missing, so its answer was still very wrong.

So, considering this, here are the rankings (no winner yet):

- Gemini 2.5 Pro

- Claude 4.1 Opus

- GPT-5 High Reasoning
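For anyone who wants to repeat the comparison with their own count, here is a minimal sketch of how "which model came closer" could be scripted. It is only an illustration: the names `ESTIMATES`, `distance_to_range`, and `rank_by_error` are made up for this example, the numbers are the ones from the results list, and the true count is deliberately left as a parameter because it is not published here. A range such as 120-135 is scored by its distance to the nearest end of the range, which is an assumption about how to treat ranges, not a rule stated anywhere in this post.

```python
from typing import Dict, List, Tuple

# Estimates from the results list, stored as (low, high) ranges.
# A single number is stored as a range whose two ends are equal.
ESTIMATES: Dict[str, Tuple[int, int]] = {
    "Gemini 2.5 Pro": (210, 210),
    "Claude 4.1 Opus": (120, 135),
    "GPT-5 High Reasoning": (240, 240),
}


def distance_to_range(true_count: int, low: int, high: int) -> int:
    """Return 0 if true_count lies inside [low, high], else the gap to the nearest end."""
    if true_count < low:
        return low - true_count
    if true_count > high:
        return true_count - high
    return 0


def rank_by_error(
    estimates: Dict[str, Tuple[int, int]], true_count: int
) -> List[Tuple[str, int]]:
    """Sort models from smallest to largest miss; ties keep dictionary order."""
    scored = [
        (name, distance_to_range(true_count, low, high))
        for name, (low, high) in estimates.items()
    ]
    return sorted(scored, key=lambda item: item[1])
```

Calling `rank_by_error(ESTIMATES, true_count)` with your own hand count returns a list of `(model, miss)` pairs, closest first.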

We’ll see if things improve in the future.