I remember back in the day when I was seriously considering switching away from some online services. I had grown less and less comfortable with being tracked and having my activity aggregated and, potentially, misused. This journey was also part of how I learned about marketing and the opportunities that exist in that space.

One of the things I didn’t like then, and I’m still personally not a big fan of, is remarketing. You know the one: you visit a site, and from that moment on, you’re shown ads for that same site until you either buy something (so the ads stop) or start using an ad blocker.

While I believe good marketing doesn’t need this aggressive approach, I also realize that sometimes making a sale requires pushier marketing. I don’t agree with those tactics, but that’s not really the point of this discussion.

Enter AI (yes, I know, it’s everywhere)

What I found interesting is that, with the advent of AI, I came to terms with a lot of these concerns.

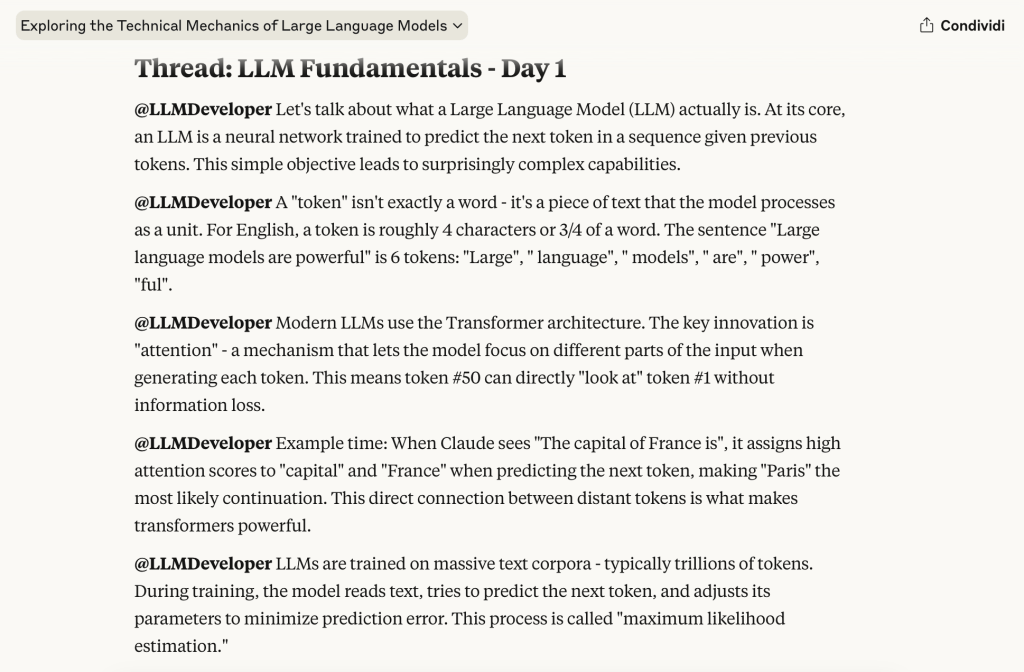

One of the reasons AI can be so powerful is that the more context it has, the better it can understand how you work, what could work for you, and how to be genuinely helpful. On some levels, the benefits are incredible. That’s why some people recommend long-form conversations with AI: when you ask detailed questions with plenty of context, the replies you get back are much more relevant.

This shows up in some amusing contradictions. Yesterday, I saw a LinkedIn post where someone asked: “I need to wash my car, and the car wash is only 50 meters away. How should I get there?”

One AI said “by walking.” Another said “by car, because you need to wash the car after all.”

The problem here is obvious: we expect AI to get the answer right, but the right answer depends on context.

The real question was “how should I get there to wash the car, knowing I’ll need to take the car with me?” but that wasn’t explicitly asked.

It’s all about assumptions. If you provide the full context (“I need to wash my car AND I need to decide how to get there so I can get it washed”), it becomes clear that the question was a trick, and the AI would probably give you the right answer.

Switching away was not (only) about tracking

So what’s the point of all this? It made me re-evaluate many of my concerns about sharing data.

After thinking a lot about it, I realized that my real problem was that I wasn’t getting enough value out of those services. All that tracking just to show me ads, to track me more, to sell me more stuff… that kind of intelligence wasn’t giving me what I needed. It wasn’t providing value to me as a customer.

Nowadays, things are shifting. The value you might get from AI is so vastly different, so powerful, that it might genuinely change some people’s lives.

I believe that choosing a service or product has always been about the value it provides. There’s always a trade-off: how much am I getting versus what am I paying? Sometimes you’re getting something for free, but you’re paying with your personal information. The famous saying “if the service is free, you are the product” still stands.

At the same time, it’s a trade-off you might revisit once you’re getting that much value out of it. But until you get there, while the value is still modest, it all comes down to one question: is this giving me enough or not?